Intro

This is my first stop on the Power Platform learning train. As I mentioned in the first post, this seems like a significant moment to me. Over the last few versions users have had more control over the web client. Between the Personalise and Design options you can tweak a lot of the elements on a page.

If you wanted to introduce some logic you could do that through Power Automate. Trigger a flow in response to some event in Business Central, call some other service, post some data back to BC. Lovely, but all a little hidden away from the Business Central UI (with the exception of approvals, which remain a confusing hybrid of Power Automate and the old Business Central workflows). If you wanted to add some Power Automate into the mix and make it prominent in the UI, you still needed to do some AL development.

Not any more.

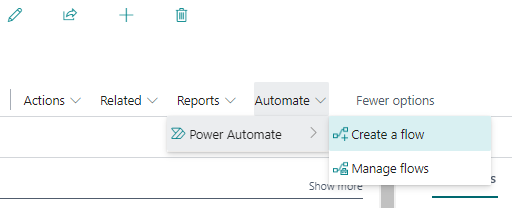

Automate Menu

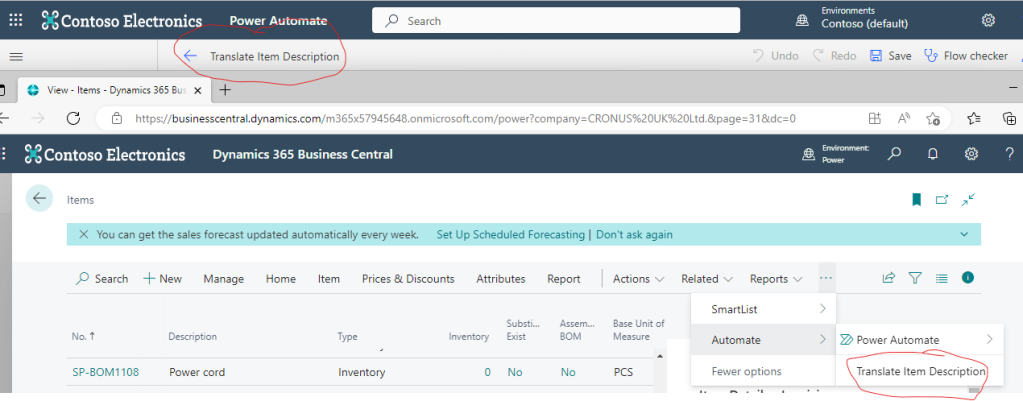

You might have noticed that there is an Automate menu pretty much everywhere now.

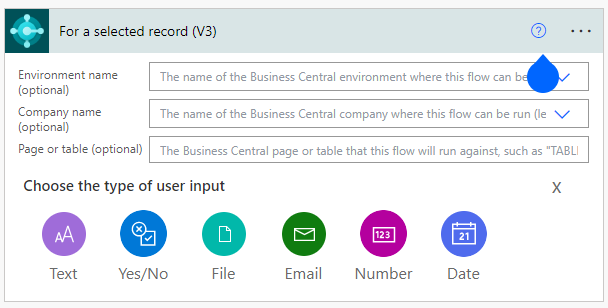

Let’s try clicking on Create a flow and see what happens. Power Automate opens a new flow with the Business Central trigger “For a selected record (v3)”.

You can see that there are a few options for the trigger:

- Environment: either specify a single environment that this flow can be used in or leave blank to appear in all environments

- Company: specify a single company or leave blank for all

- Page or table: to determine which page(s) the flow can be triggered from

- Add some UI: optionally add inputs for the user to fill in when they run the flow
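To make the "leave blank for all" behaviour concrete, here is a minimal sketch of how those scope options could be evaluated. It is only a model of the description above, not how Business Central or Power Automate implement it; `TriggerScope` and `flow_is_offered` are hypothetical names, and treating a blank table as "not restricted" is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TriggerScope:
    """Hypothetical model of the scope options on the trigger."""
    environment: Optional[str] = None  # blank = all environments
    company: Optional[str] = None      # blank = all companies
    table: Optional[str] = None        # e.g. "TABLE27"; blank = not restricted (assumption)


def flow_is_offered(scope: TriggerScope, environment: str, company: str, table: str) -> bool:
    """Return True if a flow with this scope would be offered in the given context."""
    def matches(wanted: Optional[str], actual: str) -> bool:
        # A blank option places no restriction on that dimension.
        return not wanted or wanted == actual

    return (
        matches(scope.environment, environment)
        and matches(scope.company, company)
        and matches(scope.table, table)
    )
```

A scope of `TriggerScope(table="TABLE27")` would then be offered on item pages in every environment and company, while `TriggerScope(environment="Sandbox")` would be hidden in any other environment.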

Example: Translate Item Description

I want to add a flow to get the description of an item, translate it into another language and update the record with the translation. I want the user to be able to choose the language that they want to translate into.

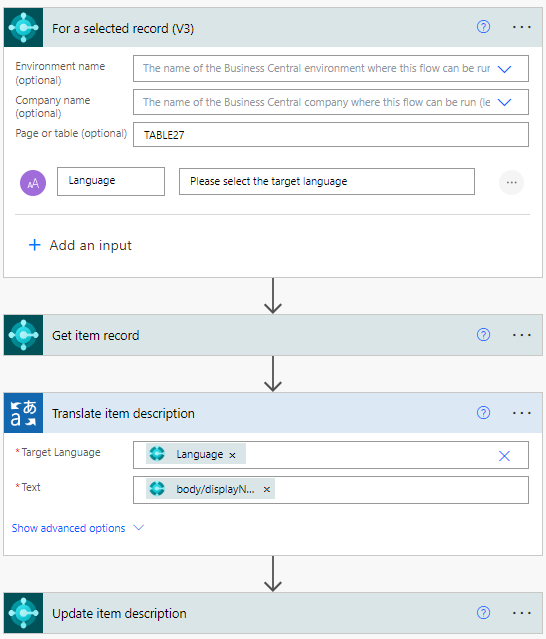

This is what the flow looks like:

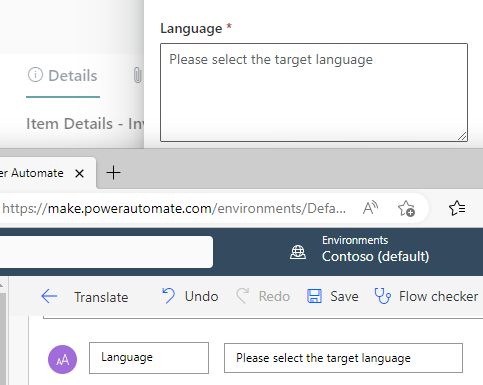

I’ve added a text input to the trigger to allow the user to enter the language that they want to translate to. I’ve also specified that this flow applies to “TABLE27” – so the item list and item card pages.

From there on:

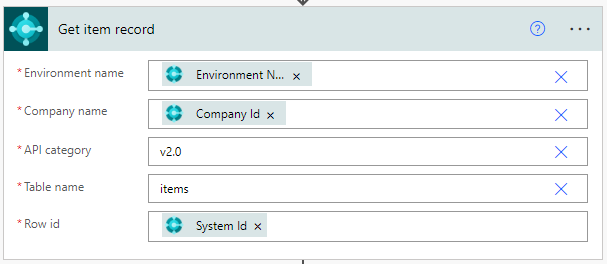

- Get the item record (the “for a selected record” trigger outputs the SystemId of the record that the flow was run from)

- Use a connection to Microsoft Translator (a free, throttled connection). Translate the item displayName (description) into the language entered by the user (the Language UI from the trigger is included as an output)

- Update the item record with the output of the translation
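For readers who think in code, the three actions above can be sketched as a plain function. This is an illustration of the data flow only: the flow itself is built in the Power Automate designer, and `get_item`, `translate` and `update_item` are hypothetical stand-ins for the connector actions, passed in as callables.

```python
import json
from typing import Callable, Dict


def translate_item_description(
    system_id: str,
    target_language: str,
    get_item: Callable[[str], Dict[str, str]],
    translate: Callable[[str, str], str],
    update_item: Callable[[str, str], None],
) -> str:
    """Mirror the three flow actions and return the JSON body sent in the update."""
    # 1. Get the item record using the SystemId that the trigger outputs.
    item = get_item(system_id)
    # 2. Translate displayName into the language the user entered.
    translated = translate(item["displayName"], target_language)
    # 3. Build the update body and send it back to the same record.
    body = json.dumps({"displayName": translated}, ensure_ascii=False)
    update_item(system_id, body)
    return body
```

Building the body with a JSON serializer rather than by joining strings means a translation that happens to contain a double quote still produces valid JSON.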

Let’s test it with the “Facia Panel with display” item. I’ll enter “ja” for Japanese as the target language for translation. (You can also define a list of valid values for the user to choose from rather than having free entry).

Notes

Use the Trigger Outputs

If you want to be able to use this flow in any environment and company, don’t hard-code those values in the get/update record actions. Use the output from the trigger instead.
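The same idea, expressed as a sketch outside Power Automate: derive the record address from whatever the trigger hands you instead of baking in one environment and company. The connector hides the URL from you, so this is an analogy rather than what the flow does. The key names in `trigger_outputs` are hypothetical, and the URL shape is my assumption about the standard Business Central API v2.0 pattern.

```python
from typing import Mapping
from urllib.parse import quote

# Assumed base address of the Business Central online API.
BASE_URL = "https://api.businesscentral.dynamics.com/v2.0"


def item_url_from_trigger(trigger_outputs: Mapping[str, str]) -> str:
    """Build the item URL from trigger outputs (hypothetical key names)."""
    environment = quote(trigger_outputs["environment"], safe="")
    company_id = trigger_outputs["company_id"]
    system_id = trigger_outputs["system_id"]
    return (
        f"{BASE_URL}/{environment}/api/v2.0"
        f"/companies({company_id})/items({system_id})"
    )
```

Because nothing is fixed in the function, the same code serves a run started from a sandbox and a run started from production.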

Constructing PATCH Request

I’m constructing the request for the update record action with this expression. This is the part that seems the least low-code to me, but maybe there is an easier way to achieve this.

json(concat('{"displayName": "', outputs('Translate_item_description')?['body'], '"}'))

Captions

The caption for the action is taken from the name of the flow.

The captions for the UI are set in the flow.

As an ISV we are always conscious of the languages that we need to support and captions that we need to translate. Unfortunately I couldn’t find anything about providing translations for these captions.

We do have the user ID and name of the user that triggered the flow, so perhaps it is possible to retrieve the language of that user and adapt the UI, but it definitely doesn’t look like something a consultant or citizen developer is going to do.

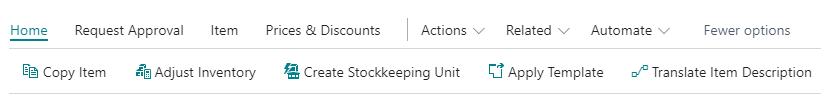

Personalise

When you personalise the page you can move the actions out of the Automate menu like any other actions. I’ve dragged this one into the Home group here. It doesn’t look like you can choose an image for the promoted action though.

Thoughts

OK, so this is a trivial example, but even so I am impressed. You can add an action to specific pages, across all environments and their companies, with some UI, to integrate with external services without writing any AL code.

Power Automate handles most of the complexity of connecting to and authenticating with an external service for you.

For real use cases you might be more dependent on doing some API development in Business Central first to expose the correct data and bound actions, but that division makes sense to me. Handle the Business Central stuff in Business Central and handle the integration with other services in Power Platform.

How does that all hang together? How do you deploy an entire solution when that solution consists of an AL app, some Power Automate flows, maybe a Power App? What about source control? How do you manage credentials to external services when you deploy into another tenant? All good questions that I don’t have any answers to yet.