Intro

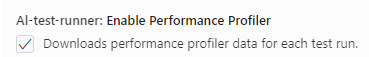

For a while now AL Test Runner has been able to download the code coverage details after running your tests. It outputs a summary of the objects that were hit, with some stats, and then highlights the lines that were hit in the previous test run or the last time you ran all the tests. More in the docs.

Recently, VS Code has added an API that test extensions can feed coverage data into, along with some UI to display that coverage. It's pretty cool.

Test Coverage

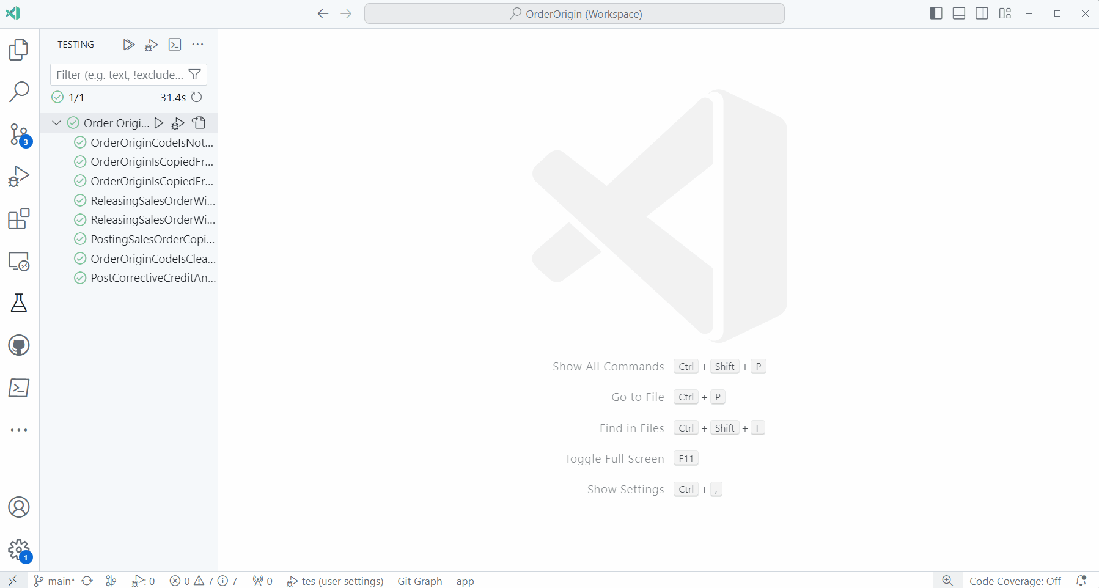

The first thing you'll notice is this "Test Coverage" panel, which is displayed after the tests have run. It shows a tree of the objects that were hit by the run, along with their percentage coverage (in statement coverage terms).
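To make the statement coverage figure concrete, here is a minimal TypeScript sketch of how per-object percentages like the ones in the tree could be calculated: an object's percentage is the number of its statements hit at least once, divided by the total number of statements in that object. The `CoverageLine` shape and the `summariseCoverage` function are hypothetical, made up for illustration; AL Test Runner's actual coverage format and code may well differ.

```typescript
// Hypothetical shape of one line of coverage output; the real format may differ.
interface CoverageLine {
  objectType: string; // e.g. "Codeunit"
  objectId: number;
  lineNo: number;
  hits: number; // 0 means the statement was not executed
}

interface ObjectCoverage {
  objectType: string;
  objectId: number;
  covered: number; // statements hit at least once
  total: number; // all statements recorded for the object
  percentage: number; // covered / total * 100
}

// Groups coverage lines by object and computes statement coverage for each one.
// Objects with no hits at all are left out, like a tree of "objects hit by the run".
function summariseCoverage(lines: CoverageLine[]): ObjectCoverage[] {
  const byObject = new Map<string, ObjectCoverage>();
  for (const line of lines) {
    const key = `${line.objectType}:${line.objectId}`;
    let entry = byObject.get(key);
    if (entry === undefined) {
      entry = { objectType: line.objectType, objectId: line.objectId, covered: 0, total: 0, percentage: 0 };
      byObject.set(key, entry);
    }
    entry.total += 1;
    if (line.hits > 0) {
      entry.covered += 1;
    }
  }
  // Every entry was created from at least one line, so total is never zero here.
  const summary = Array.from(byObject.values()).filter((e) => e.covered > 0);
  for (const entry of summary) {
    entry.percentage = (100 * entry.covered) / entry.total;
  }
  return summary;
}
```

In VS Code's coverage API, a covered/total pair like this is what a `vscode.TestCoverageCount` holds, and it is reported via a `vscode.FileCoverage` passed to `TestRun.addCoverage`. The sketch leaves the `vscode` module out so that it runs on its own.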

If you click on a file in the tree it will open the file in the editor and you will see lines which were hit highlighted in the gutter.

In fact, these highlights will continue to be shown as you navigate around your source code. I'm leaving the "Code Coverage: Off/Previous/All" item in the status bar, though, because it highlights each whole line, which is much easier to see if you want to zoom out and get an impression of the coverage of the whole file.

Coverage from Previous Runs

Coverage from previous test runs is stored and can be accessed from the Test Results pane (usually shown at the bottom of the screen). It might be useful to switch between the coverage results of different test runs to see how the coverage % has changed over time (with the usual caveat about not using code coverage as a target).

The gutter decorations that indicate which lines in the current file have been covered are only shown when the latest test coverage is being displayed. That makes sense because the code coverage detail is all based on line numbers: once you've made some changes to a file, those line numbers are obsolete.
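To illustrate why stale line numbers are a problem, here is a hypothetical TypeScript sketch (not how VS Code or AL Test Runner actually handles it) that stores a cheap fingerprint of the file next to the covered line numbers and declines to decorate anything once the file content no longer matches. `takeSnapshot`, `linesToDecorate` and the FNV-1a-style hash are illustrative choices made up for this example.

```typescript
// Illustrative FNV-1a-style 32-bit hash over UTF-16 code units; cheap, not cryptographic.
function fnv1a(text: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash;
}

// Hypothetical record of one file's coverage as captured at the end of a test run.
interface CoverageSnapshot {
  length: number; // file length when coverage was captured
  contentHash: number; // fnv1a of the file content at that time
  coveredLines: Set<number>; // 1-based line numbers hit during the run
}

function takeSnapshot(text: string, coveredLines: number[]): CoverageSnapshot {
  return { length: text.length, contentHash: fnv1a(text), coveredLines: new Set(coveredLines) };
}

// Returns the line numbers to highlight, or none if the file has changed since the run,
// because the stored line numbers may no longer point at the same statements.
function linesToDecorate(snapshot: CoverageSnapshot, currentText: string): number[] {
  const unchanged =
    currentText.length === snapshot.length && fnv1a(currentText) === snapshot.contentHash;
  if (!unchanged) {
    return [];
  }
  return Array.from(snapshot.coveredLines).sort((a, b) => a - b);
}
```

A length check plus a 32-bit hash is a trade-off: it is cheap, but a hash collision could in principle let stale decorations through, so a more careful implementation might keep the full text or a stronger digest instead.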